Sitemaps And Robots.txt Files For SEO: A Beginner-Friendly Guide

Published March 11, 2024

In the digital landscape, earning a top spot on search engine results pages (SERPs) is the goal for most websites. Search engine optimization (SEO) is the strategy that guides a site through the complex realm of online visibility. If SEO is the journey, sitemaps and robots.txt files are the map and the rules of the road: a sitemap tells search engines which pages exist, and a robots.txt file tells them which parts of the site to stay out of. They are the unsung heroes of the SEO story.

In this article, we’ll explore how sitemaps and robots.txt files work, why they are essential, and how they help build a stronger online presence. By the end, you’ll know how to use these tools to help search engines find and understand your site.

A Glimpse Of Website Indexing And Crawling

Before you learn about sitemaps and robots.txt files, there are two related terms you should be familiar with: website indexing and web crawling.

Indexing is how search engines store and organize information about web pages. In simple terms, the index is the database a search engine draws on to answer queries: a page that isn’t indexed can’t appear in results. Once a page is indexed, where it ranks depends on many SEO factors, ranging from keywords to relevance and content quality.

Crawling is the process search engines use to discover web pages for indexing. Each search engine runs automated programs called crawlers (also known as bots) that navigate the web, looking for new or updated pages to add to their index. They do this by following the links on each page they visit.
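
To make these two ideas concrete, here is a toy Python sketch that crawls a tiny in-memory “web” by following links and builds a simple inverted index, a map from each word to the pages that contain it. The example.com pages and their contents are invented for illustration, and real crawlers and search indexes are vastly more complex.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical pages standing in for the web (URL -> HTML).
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <p>Welcome to our shop</p>',
    "https://example.com/about": '<a href="/">Home</a> <a href="/contact">Contact</a> <p>About our shop</p>',
    "https://example.com/contact": "<p>Contact us by email</p>",
    "https://example.com/orphan": "<p>No page links here</p>",
}


class PageParser(HTMLParser):
    """Collects link targets (href values) and visible words from one page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        self.words.extend(data.lower().split())


def crawl_and_index(start_url):
    """Breadth-first crawl from start_url; returns (visited URLs, inverted index)."""
    index = {}  # word -> set of URLs containing that word
    visited = set()
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        # Skip pages already crawled or missing from our toy web.
        if url in visited or url not in PAGES:
            continue
        visited.add(url)
        parser = PageParser()
        parser.feed(PAGES[url])
        parser.close()
        for word in parser.words:
            index.setdefault(word, set()).add(url)
        for href in parser.links:
            # Resolve relative links like "/about" against the current page URL.
            queue.append(urljoin(url, href))
    return visited, index


visited, index = crawl_and_index("https://example.com/")
print(sorted(visited))
print(sorted(index["shop"]))
```

The page at /orphan never gets crawled because nothing links to it, which is exactly the gap a sitemap helps fill.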

Understanding Sitemaps

A sitemap is a blueprint of your site: a file or page that describes its structure and shows where its pages are located. Essentially, it’s a list of the URLs you want search engines to explore and index.

There are two main types of sitemaps: XML and HTML.

- XML sitemaps. Designed for search engines, an XML sitemap is a structured list of the pages on your site you want indexed. For each URL, it can also include optional details such as the last modification date, how often the page changes, and its relative importance. This metadata helps search engines crawl your site more efficiently.

- HTML sitemaps. Unlike XML sitemaps, HTML sitemaps are mainly for people. They present an overview of the site on a single page, making it easier for visitors to navigate. Because they link to your pages, HTML sitemaps can also help search engines discover them.
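
As a rough illustration, the Python sketch below writes a small XML sitemap with the standard library. The urlset, url, loc, lastmod, changefreq, and priority tags come from the sitemaps.org protocol; the page URLs, dates, and the build_sitemap helper are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return XML sitemap text for a list of dicts with 'loc' and optional metadata.

    build_sitemap is a hypothetical helper written for this example.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Optional tags are only written when a value is supplied.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and dates.
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-03-11", "changefreq": "weekly", "priority": "1.0"},
    {"loc": "https://www.example.com/blog/sitemaps-guide", "lastmod": "2024-03-01"},
]
print(build_sitemap(pages))
```

Only the loc tag is required for each URL; the others are optional hints.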

Sitemaps are especially vital for large websites, new sites, those with numerous unlinked pages, or those with frequent updates.

An effective sitemap should include every page you want crawled and indexed. Leave out duplicate pages and any pages you want to keep out of search results. Once your sitemap is ready, submit it to search engines through their webmaster tools, such as Google Search Console or Bing Webmaster Tools.
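
Here is a minimal sketch of that filtering step, assuming you already have a list of candidate URLs (for example, exported from your CMS). The normalize and select_sitemap_urls helpers and the /admin/ prefix are hypothetical, and the sketch only catches trivially different URLs, such as a differently cased hostname or a #fragment; real duplicate content is harder to detect.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Lowercase scheme and host and drop the #fragment (hypothetical helper)."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, ""))


def select_sitemap_urls(candidates, excluded_prefixes):
    """Keep each URL once, skipping any whose path starts with an excluded prefix."""
    seen = set()
    selected = []
    for url in candidates:
        clean = normalize(url)
        if clean in seen:
            continue  # trivially duplicate of a URL already kept
        if any(urlsplit(clean).path.startswith(p) for p in excluded_prefixes):
            continue  # a section we don't want search engines to find
        seen.add(clean)
        selected.append(clean)
    return selected


# Hypothetical candidate list.
candidates = [
    "https://www.example.com/",
    "https://WWW.example.com/#top",
    "https://www.example.com/blog/post-1",
    "https://www.example.com/admin/settings",
    "https://www.example.com/blog/post-1",
]
print(select_sitemap_urls(candidates, excluded_prefixes=["/admin/"]))
```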

The Role Of Sitemaps In SEO

Sitemaps are vital for SEO. They act as a roadmap that points search engines to the important pages on your website, helping them discover, crawl, and index all of your site’s content.

- Enhanced site crawling. Sitemaps lead search engine bots through your site, making it easier for them to find and crawl every page. This is especially helpful for large sites with many pages or complex navigation.

- Faster discovery. By listing your URLs in one place, a sitemap can help search engines find new pages sooner. This is useful for new sites hoping to be noticed quickly, although a sitemap does not guarantee that any page will be indexed.

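- Page prioritization. The XML sitemap format lets you give each page a priority value, signaling which content you consider most important. Treat this as a hint only: Google has said it ignores the priority value, though other search engines may use it.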

- Content updates. When you add or change content, an accurate last-modified date in your sitemap helps search engines notice the change and recrawl the page, keeping your site current in their index.

- Optimized visibility for media-rich content. Sitemaps boost search engine visibility for media-heavy sites with videos or images, raising the chances of ranking in image or video searches.

- Improved user experience. HTML sitemaps help visitors navigate your site. This can indirectly benefit SEO, since search engines aim to reward sites that are easy to use.

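- Error detection and resolution. Sitemaps help you spot problems. Tools such as Google Search Console show whether your submitted sitemap could be read and let you check the indexing status of the URLs it lists. Checking those URLs regularly helps ensure every page stays accessible, which is a core part of solid SEO practice.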
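
For the error-detection point above, a small script can read your sitemap and flag URLs that don’t return HTTP 200. This is a sketch under a few assumptions: the helper names are hypothetical, it sends HEAD requests (which a few servers reject), and because urlopen follows redirects, a redirected URL reports the status of its final destination. The demo at the end swaps in a fake status lookup so it runs without network access.

```python
import xml.etree.ElementTree as ET
from urllib.error import HTTPError
from urllib.request import Request, urlopen

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locs(xml_text):
    """Return every <loc> URL listed in an XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]


def http_status(url):
    """Send a HEAD request and return the HTTP status code (0 if unreachable)."""
    try:
        # urlopen follows redirects, so this is the final destination's status.
        with urlopen(Request(url, method="HEAD"), timeout=10) as response:
            return response.status
    except HTTPError as err:
        return err.code  # e.g. 404 or 500
    except OSError:
        return 0  # DNS failure, refused connection, timeout, etc.


def find_problem_urls(xml_text, fetch_status=http_status):
    """Map each sitemap URL that does not answer 200 OK to its status code."""
    problems = {}
    for url in sitemap_locs(xml_text):
        status = fetch_status(url)
        if status != 200:
            problems[url] = status
    return problems


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old-page</loc></url>
</urlset>"""

# Offline demo: a stand-in lookup instead of real HTTP requests.
fake_status = {"https://www.example.com/": 200, "https://www.example.com/old-page": 404}
print(find_problem_urls(sample, fetch_status=fake_status.get))
```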

Understanding Robots.txt Files

A robots.txt file is a plain text file that website owners use to give instructions to web robots, most often search engine crawlers. It’s part of the Robots Exclusion Protocol (REP), a long-standing convention (formalized as RFC 9309 in 2022) that tells crawlers which parts of a site they may access.

The file lives in the website’s root directory. It tells crawlers which sections of the site they should not crawl. For example, you may want to keep bots out of low-value pages, such as internal search results, or save server bandwidth by asking them to skip certain sections.
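
Because crawlers only look for the file at the root of a host, you can work out which robots.txt governs any page from its URL. A minimal Python sketch (robots_txt_url is a hypothetical helper name, and the URLs are placeholders):

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL for page_url: same scheme and host, path /robots.txt."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post-1?ref=home"))
print(robots_txt_url("https://shop.example.com/cart"))
```

Each combination of protocol, host, and port is treated as a separate site, so a subdomain such as shop.example.com needs its own robots.txt file.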

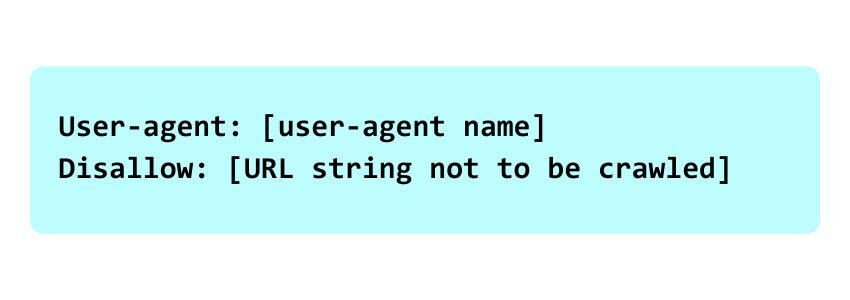

Here is the basic format of a robots.txt file:

“User-agent” names the web crawler the instructions apply to, such as Googlebot for Google. “Disallow” tells that crawler not to crawl URLs whose path begins with the specified value. “Allow” does the opposite: it grants access to a subdirectory or page even if its parent directory is disallowed.

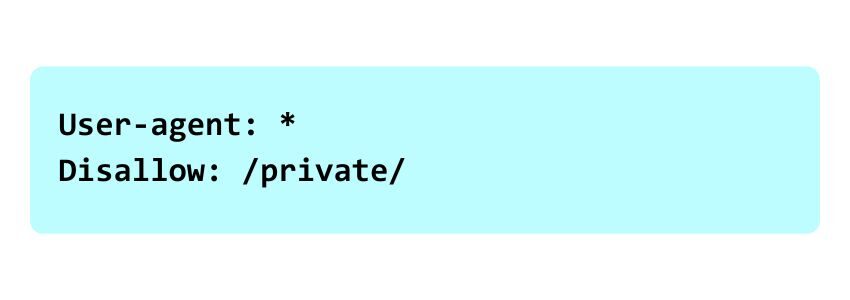

For example:

In the example above, all bots (represented by the asterisk *) are asked not to crawl any pages in the ‘private’ directory. Remember that robots.txt directives are requests, not enforcement: some bots, particularly malicious ones, simply ignore them.
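
Here is how a well-behaved crawler might check the example rules before fetching a page, using Python’s built-in urllib.robotparser module. The exact path in the example is assumed here to be /private/, and the URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Assumed rules: every crawler is asked to stay out of /private/.
rules = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

for url in ("https://www.example.com/blog/", "https://www.example.com/private/report.html"):
    allowed = parser.can_fetch("Googlebot", url)
    print(url, "->", "allowed" if allowed else "blocked")
```

A compliant crawler makes this check voluntarily before each request. Nothing in the protocol forces a bot to do so.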

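Thus, it is crucial to protect sensitive data properly, for example with password protection, rather than relying on robots.txt to hide it. The file is publicly readable, so listing a sensitive path there can even draw attention to it. Robots.txt works best alongside a sitemap: robots.txt tells crawlers where not to go, while the sitemap points them to the pages you want indexed. Together, they improve how your site interacts with web crawlers and support its online visibility.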
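
One simple way to connect the two tools is a Sitemap line in robots.txt, which the sitemaps.org protocol supports and major search engines such as Google and Bing read. The line must use an absolute URL and applies regardless of User-agent groups. The sketch below uses placeholder URLs and Python’s urllib.robotparser (site_maps() requires Python 3.8 or later) to show a crawler reading it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that also advertises the sitemap location.
rules = """\
User-agent: *
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())
print(parser.site_maps())  # list of sitemap URLs found in the file
```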

The Role Of Robots.txt Files In SEO

Robots.txt files play an important role in SEO. Here’s a breakdown of their key functions:

- Guiding search engine crawlers. Robots.txt files tell search engine crawlers which sections of a website to crawl and which to skip.

- Conserving crawl budget. Search engines will only crawl a limited number of pages on a site in a given period. By blocking low-value pages, you help crawlers spend that budget on your important, high-quality pages. This matters most for very large sites.

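- Keeping pages out of crawls. Robots.txt can stop search engines from crawling pages you don’t want them to read. It does not reliably keep those pages out of search results, though: a blocked URL can still be indexed if other pages link to it. To keep a page out of the index, use a noindex meta tag or X-Robots-Tag HTTP header instead, and leave the page crawlable so search engines can see that directive.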

- Managing website traffic. Limiting how aggressively bots crawl helps prevent too many requests at once and keeps the site fast. Some crawlers, such as Bingbot, honor a Crawl-delay directive in robots.txt; Googlebot ignores it.

- Directing site authority flow. Some site owners disallow low-value pages in the hope of concentrating ‘link juice’ (site authority) on the pages they want to rank. The effect is debated, so treat it as a minor consideration at best.

- Reducing crawler activity in sensitive areas. Disallowing admin or back-end sections keeps well-behaved crawlers out of them. This is not real security, however: the file is public, and malicious bots can ignore it.

- Exercising control over crawling. Robots.txt gives site owners a simple way to shape how search engines interact with their site, which supports better visibility and ranking.
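
The crawl-budget and traffic points above can be sketched with Python’s urllib.robotparser (crawl_delay() requires Python 3.6 or later). The rules are hypothetical: every crawler is asked to skip internal search pages under /search, and Bingbot is also asked to wait 5 seconds between requests. Keep in mind that Googlebot ignores Crawl-delay, so that line only affects crawlers that support it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: skip internal search results (a common crawl-budget saver)
# and ask Bingbot to pause between requests.
rules = """\
User-agent: Bingbot
Crawl-delay: 5
Disallow: /search

User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

print(parser.crawl_delay("Bingbot"))    # 5
print(parser.crawl_delay("Googlebot"))  # None: no delay requested for this bot
print(parser.can_fetch("Googlebot", "https://www.example.com/search?q=shoes"))  # False
```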

How To Create And Upload A Robots.txt File

Creating a robots.txt file involves a few steps, and the result guides search engine bots on how to engage with your site. Here’s a guide to creating and implementing one:

- Creating a robots.txt file. Create a new file named robots.txt (all lowercase) in a plain text editor like Notepad or TextEdit, and save it as UTF-8 plain text. Use a plain text editor rather than a word processor, which may save the file in a format web robots cannot interpret.

- Setting your User-agent. The “User-agent” line in a robots.txt file specifies which web crawler the instructions that follow apply to. For instance, “User-agent: Googlebot” targets Google’s crawler, while “User-agent: *” applies to all robots.

- Establishing rules. The main directives in a robots.txt file are “Disallow” and “Allow.” “Disallow” tells the robot not to crawl a specific page or directory, while “Allow” permits it to access a page or directory even if its parent directory is disallowed.

- Uploading your robots.txt file. Once you’ve created the file, upload it to your website’s root directory, typically the same directory that holds your site’s main index.html file. Crawlers only look for the file at the root of each host, so a robots.txt placed in a subfolder will be ignored.

- Verifying your robots.txt file. After uploading the file, check that it works as intended. First, confirm it’s publicly reachable by entering your root domain followed by /robots.txt. For example, if your site were www.example.com, you would enter www.example.com/robots.txt in your browser’s address bar. To see how Google reads the file, you can also check the robots.txt report in Google Search Console.
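
Visiting /robots.txt only shows that the file is reachable. Before uploading, you can also test a draft against URLs you expect to be allowed or blocked. This sketch uses Python’s urllib.robotparser with hypothetical paths; treat it as a sanity check only, since it doesn’t match Google’s parser exactly. For example, it doesn’t support the * and $ wildcards Google allows inside paths, and it applies the first matching rule rather than the most specific one.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft robots.txt. The Allow line comes before the broader
# Disallow line: Python's parser applies the first matching rule, while
# Google applies the most specific (longest) match, so this order makes
# both agree.
draft = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
"""

# (user agent, URL) -> whether you expect the URL to be crawlable
expectations = {
    ("Googlebot", "https://www.example.com/drafts/new-post"): False,
    ("Googlebot", "https://www.example.com/private/notes.html"): True,
    ("Bingbot", "https://www.example.com/private/press-kit.html"): True,
    ("Bingbot", "https://www.example.com/private/notes.html"): False,
}

parser = RobotFileParser()
parser.parse(draft.splitlines())

for (agent, url), expected in expectations.items():
    actual = parser.can_fetch(agent, url)
    status = "OK" if actual == expected else "MISMATCH"
    print(f"{status}: {agent} {url} -> {'allowed' if actual else 'blocked'}")
```

Notice that Googlebot is allowed into /private/notes.html: because it has its own group, it follows only that group and ignores the rules under “User-agent: *”. If you want both sets of rules to apply to Googlebot, repeat them in its group.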

Navigating With Sitemaps And Robots.txt

In the world of SEO, visibility sets the beat for success, and sitemaps and robots.txt files are key conductors of that performance. As we end our journey, remember that these tools are fundamentals, not optional extras: a sitemap helps search engines find the pages that matter, and a robots.txt file keeps crawlers focused on them. Use both well, and you give your site a better chance to climb the ranks and step into the limelight.


This Content Has Been Reviewed For Accuracy By Experts

Our internal team of experts has fact-checked this content.

About The Author

Hi, I’m Corinne Grace! As an experienced writer holding a bachelor’s degree from Riverside College, I excel in creating articles supported by thorough research. Specializing in a wide range of topics like marketing and law, I craft engaging stories that connect with my readers. I continuously work to refine my skills to adapt to the ever-changing digital world.